This most recent sprint was one of the most challenging but fulfilling sprints yet!

The end of this sprint coincided with the final submission deadline for the Oculus Launchpad program, so it was time to finish the project’s remaining essential features while also building out promotional materials and responding to playtest notes. Needless to say, I didn’t sleep much. 😊

The Oculus Launchpad program only distributes grants to a handful of its more than 100 participants based on the merit of their vertical slices, so my demo had to be looking and playing its best. I didn’t have the money to continue building Jukebox Beatdown beyond my vertical slice, so the project’s survival depended on the grant.

For my project to be eligible for Oculus’s consideration, it had to meet the following requirements:

Provide a complete experience in five minutes or less.

Meet the Oculus Store’s technical standards, especially in terms of performance.

Be accompanied by at least a basic press kit.

In this post, I’ll discuss how I reached each of these goals, which are divided nicely into the categories of Design, Engineering, and Marketing:

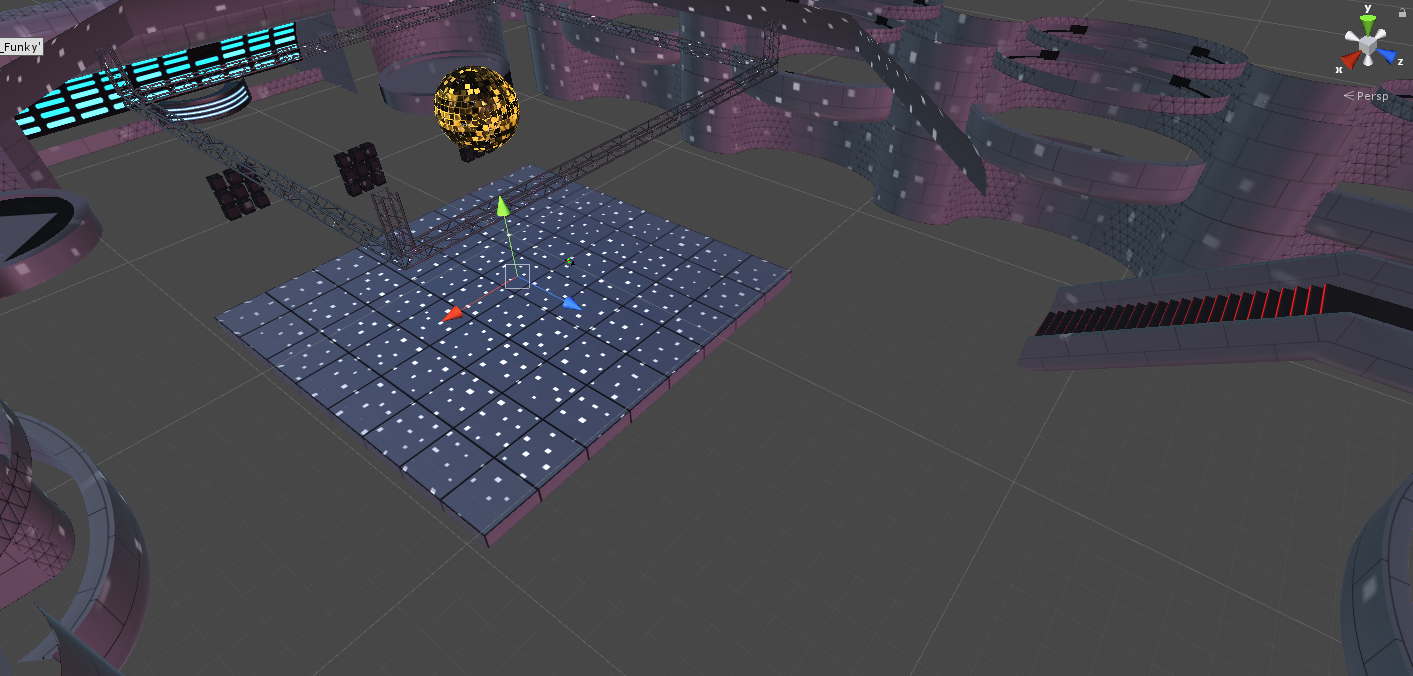

DESIGN: Building the game’s five-minute demo by scripting the attacks of Funky Franny, the game’s first boss. By doing so, we hoped to achieve the game’s central promise of boss-fights set to music.

ENGINEERING: Optimizing the game so that it met the Oculus Store’s technical requirements (and didn’t trigger VR-nausea).

MARKETING: Building a professional and exciting presence for the game online through my press kit.

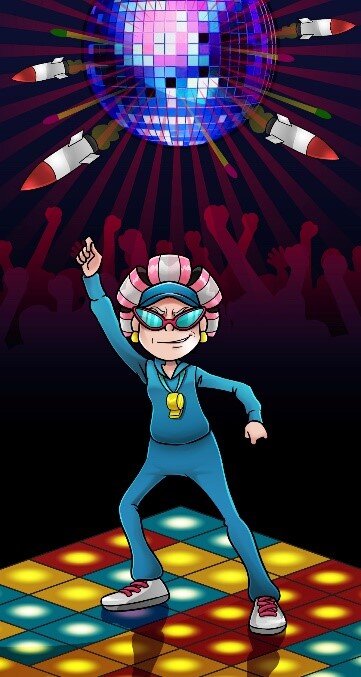

The final banner art for the project.

Design

This was far and away the most fun sprint of this game’s production because I finally had the technical foundation to fulfill the game’s central promise: boss battles set to music.

Like the movie Fantasia, we wanted all the bosses in Jukebox Beatdown to have their actions choreographed to music.

This was an intimidating goal. The song I commissioned for this project had multiple saxophone, horn, drum, synth, bass, guitar, string, and keyboard tracks, each with either a unique melody or rhythm. It was a bit overwhelming to decide which sound would be paired with which attack.

To make this process easier, Ben Young, the game’s composer, designed our song so that it had four distinct, self-contained phases. We thought that these self-contained phases would make it easier for the player to notice when the music matched with the action of the game.

A screenshot of the Ableton project for the game’s song.

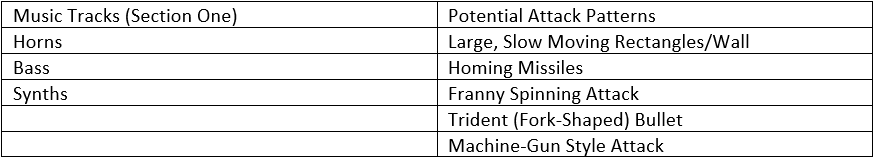

To cut through the noise (pardon the pun), I made a list with two columns for each of these four sections. One column had all the tracks in that section (saxophone, guitar 1, guitar 2, etc.) and the other column had a list of attacks I could create for the game. This list was an anthology of riffs on attacks I had seen in other games with great bosses, such as Dark Souls 3, Cuphead, Titan Souls, and Furi, plus some inventions of my own.

From there, it became a jigsaw puzzle of music, visuals, and gameplay. Using the music, which was basically set in stone, as a starting point, I tried to pair the attacks and music together. The design process was complex and went like this: what fits a guitar melody better, a flurry of lasers or a series of rapidly-exploding bombs? If I tie the lasers to the guitar, that means I can’t use them for the saxophone, so what should I tie into the saxophone? If the lasers are more difficult than the bombs, maybe they should be with the saxophone, which comes in predominantly at the end of the song – but now I am using the bombs for the horns, so what should go with the guitar now? Moving one element displaced another.

This process of experimentation continued until we had built out the first several phases of Franny’s fight:

However, now that we had added all this new content, our framerate had dropped into the teens. It was time to put our engineering hat on and optimize the project.

Engineering (Optimization)

From an engineering perspective, VR development can be one of the most challenging forms of game development, mainly because even the slightest amount of lag can make someone sick. If you’ve ever played a new game on older hardware, you’ve probably noticed the game’s graphics lagging for a moment before updating to where they should be. This is never desirable, but it is especially unwanted in VR, where that delay can make the player nauseous.

The dangers of lag.

People get nauseous when the stimuli that their eyes are receiving do not match the stimuli that the vestibular system in their ear is receiving. (The vestibular system gathers and transmits data about our body’s position through several organs in our ear.) To minimize the difference between these stimuli, Oculus requires all desktop-connected VR content to run at 90 frames per second.

Not my diagram. I would credit this but the original link is now a 404.
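To make the 90 FPS requirement concrete, here is a rough calculation of how much time each frame gets. The function name `frame_budget_ms` is made up for illustration, and the 30 and 60 FPS rows are only there for comparison.

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# 90 FPS is the target named above; 30 and 60 are comparison points only.
for fps in (30, 60, 90):
    print(f"{fps} FPS -> {frame_budget_ms(fps):.1f} ms per frame")
```

At 90 FPS, everything the game does in a frame (game logic, physics, and rendering) has to fit in roughly 11 milliseconds.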

Unfortunately, after my first design pass, my game was running at around 15 to 30 FPS at worst on my mid-range Razer laptop. To hit 90 FPS, I had to use many optimization tricks, including:

Using object pools as I mentioned in my previous blog post.

Eliminating almost every unnecessary UI canvas object from my scene in Unity as they were constantly being recalculated, putting unnecessary stress on my CPU.

Eliminating almost every dynamic light in the scene and replacing it with lightmapping, which is essentially the practice of “painting in” a scene’s lights and shadows beforehand rather than simulating them at runtime.
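For readers who have not seen an object pool before, here is a minimal, engine-agnostic sketch of the idea in Python. It is not the code from the game (which runs in Unity); the `Bullet` and `ObjectPool` names are hypothetical and only illustrate the pattern of recycling objects instead of creating and destroying them every time.

```python
class Bullet:
    """Hypothetical projectile; stands in for any frequently spawned object."""

    def __init__(self):
        self.active = False
        self.position = (0.0, 0.0, 0.0)


class ObjectPool:
    """Pre-allocates objects once and recycles them instead of creating new ones."""

    def __init__(self, factory, size):
        self._factory = factory
        self._free = [factory() for _ in range(size)]

    def acquire(self):
        # Reuse an idle object if one exists; grow the pool only as a fallback.
        obj = self._free.pop() if self._free else self._factory()
        obj.active = True
        return obj

    def release(self, obj):
        # Deactivate and return the object to the pool rather than destroying it.
        obj.active = False
        self._free.append(obj)


pool = ObjectPool(Bullet, size=2)
first = pool.acquire()
pool.release(first)
second = pool.acquire()
print(first is second)  # True: the same object was handed out again
```

The allocation cost is paid once up front, so spawning an attack in the middle of a fight only flips an object back to active.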

However, the most impactful step for me was reducing my draw calls.

A draw call is a set of directions that the CPU (Central Processing Unit) gives to the GPU (Graphics Processing Unit) about what to render in a scene. Specifically, the draw call is the information that the GPU needs to render each object in the scene. While most computers’ GPUs do not struggle to execute these directions once received, preparing these directions puts significant strain on the CPU, which results in lag.

To use a filmmaking metaphor, you can imagine the CPU as a location scout and a GPU as a lightning-fast set-builder. In the context of the metaphor, the CPU is visiting the “real location” and sending instructions back to the GPU on what to build in the movie-set. The CPU/location scout looks at objects that make up the “real location” and communicates every visual detail about them to the GPU/set-builder, who recreates them. However, to extend the metaphor, the CPU/location-scout is using a slow fax machine, so sending these details to the GPU/set-builder takes a long time and can slow down the entire process. Thus, the more succinct the directions can be, the faster the GPU will be able to build the set. We’ll use this metaphor as a way of explaining some of these optimization techniques.

Below is a timelapse that shows a scene in Jukebox Beatdown being rendered one draw call at a time.

To reduce my draw calls, I used two techniques: mesh-baking and texture-atlasing.

Mesh-baking is when a developer takes several meshes (3D models) in their level/scene and turns them into one mesh. If we bake three meshes into one, our CPU will now need to process one draw call for those three meshes instead of three. In the context of Jukebox Beatdown, we generally baked together most meshes that shared the same shader, which is code that dictates how an object reacts to light. Our floor, for example, was made of about sixty different meshes; we baked these into one object.

To continue our movie metaphor, now that the meshes are baked together, our CPU/location-scout can describe, for example, a group of ten stereos as a group of ten stereos rather than communicate information about each stereo one-by-one. Put another way, it’s the difference between counting bowls of rice versus counting individual grains of rice. Preparing and sending the directions is the bottleneck in this context, so using succinct instructions is paramount.
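The effect of mesh-baking on the draw call count can be sketched with a toy model. It makes two loud simplifications that are assumptions rather than how any engine actually counts: every separate mesh costs exactly one draw call, and every mesh that shares a shader gets baked into a single mesh. The scene contents and shader names are hypothetical, apart from the sixty floor meshes mentioned above.

```python
def draw_calls_unbaked(meshes):
    # Simplifying assumption: each separate mesh costs one draw call.
    return len(meshes)


def draw_calls_baked(meshes):
    # Simplifying assumption: all meshes sharing a shader are merged into one.
    return len({shader for _name, shader in meshes})


# Hypothetical scene: (mesh name, shader name) pairs.
scene = [(f"floor_{i}", "floor_shader") for i in range(60)]
scene += [(f"stereo_{i}", "stereo_shader") for i in range(10)]

print(draw_calls_unbaked(scene))  # 70
print(draw_calls_baked(scene))    # 2
```

Real scenes will not collapse this neatly, but the direction of the saving is the same: fewer, larger instructions for the CPU to prepare.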

Texture-atlasing is the process of aggregating all a game’s textures onto one image file. If a project is not texture-atlased, every texture in the game is contained within a unique image file. The problem with this setup is that as the number of unique images goes up, the number of draw calls goes up as well. So, in order to minimize the number of images that need to be sent by the CPU, developers will pack as many textures as they can onto one image or texture atlas. The GPU will then look at this atlas for every texture that it needs.

In our location-scouting metaphor, texture-atlasing would mean that instead of taking pictures of every scene in our metaphorical “real-location” and sending them through the slow fax machine, our CPU is instead sending one page that contains all the pictures.

A texture atlas for the buildings in a virtual city.
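One detail that atlasing introduces is that each model’s texture coordinates have to point at its own region of the shared image. Here is a minimal sketch of that remapping, assuming the simplest possible layout: a square grid of equally sized tiles, numbered left to right, then row by row. The function name `atlas_uv` and the grid layout are assumptions for illustration; real atlas tools usually pack textures of different sizes.

```python
def atlas_uv(tile_index, tiles_per_row, u, v):
    """Map a texture's local (u, v) in [0, 1] into a square grid atlas."""
    scale = 1.0 / tiles_per_row        # each tile covers this fraction of the atlas
    col = tile_index % tiles_per_row   # tile's column in the grid
    row = tile_index // tiles_per_row  # tile's row in the grid
    return ((col + u) * scale, (row + v) * scale)


# Center of the fourth tile (index 3) in a 2x2 atlas.
print(atlas_uv(3, 2, 0.5, 0.5))  # (0.75, 0.75)
```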

All these changes together helped us reach our technical performance goals. Now, it was time to make sure our project’s marketing was as engaging as the project itself.

Producing

The Oculus Launchpad program is highly competitive, with only three to five grants awarded to around fifty entries. I knew that some of the other entrants had teams that were five or more people strong (compared to my two) and had significantly larger budgets than I did, so I knew my project needed to look as polished and exciting as possible.

At the Oculus headquarters in the San Francisco area for the presentation day.

For my project to receive the grant, it had to look professional. I knew that I had the skills to reach the required level of polish as a designer and a programmer, but maybe not as a voice-actor, graphic designer, or 3D modeler. Even if I did have the skills for those latter responsibilities, I knew I didn’t have the time to do them all.

I had $200 and a month to get the project ready for Oculus.

To ensure that I would get everything I needed by the grant deadline, I made a list of what features and assets would constitute the vertical slice of Jukebox Beatdown and then planned backwards from that date (February 28th). I then prioritized each of these items by how important they were and how much lead time they would need in order to be completed. My scrum training came in handy here.

From there, to decide which items I would outsource, I took a “time is money” approach. I estimated how long each item would take in hours if I did it myself and then multiplied that number by my hourly pay. I then compared that figure with how much it would cost to pay someone to do the same job on Fiverr. When a task was significantly cheaper to do via Fiverr, I outsourced it.

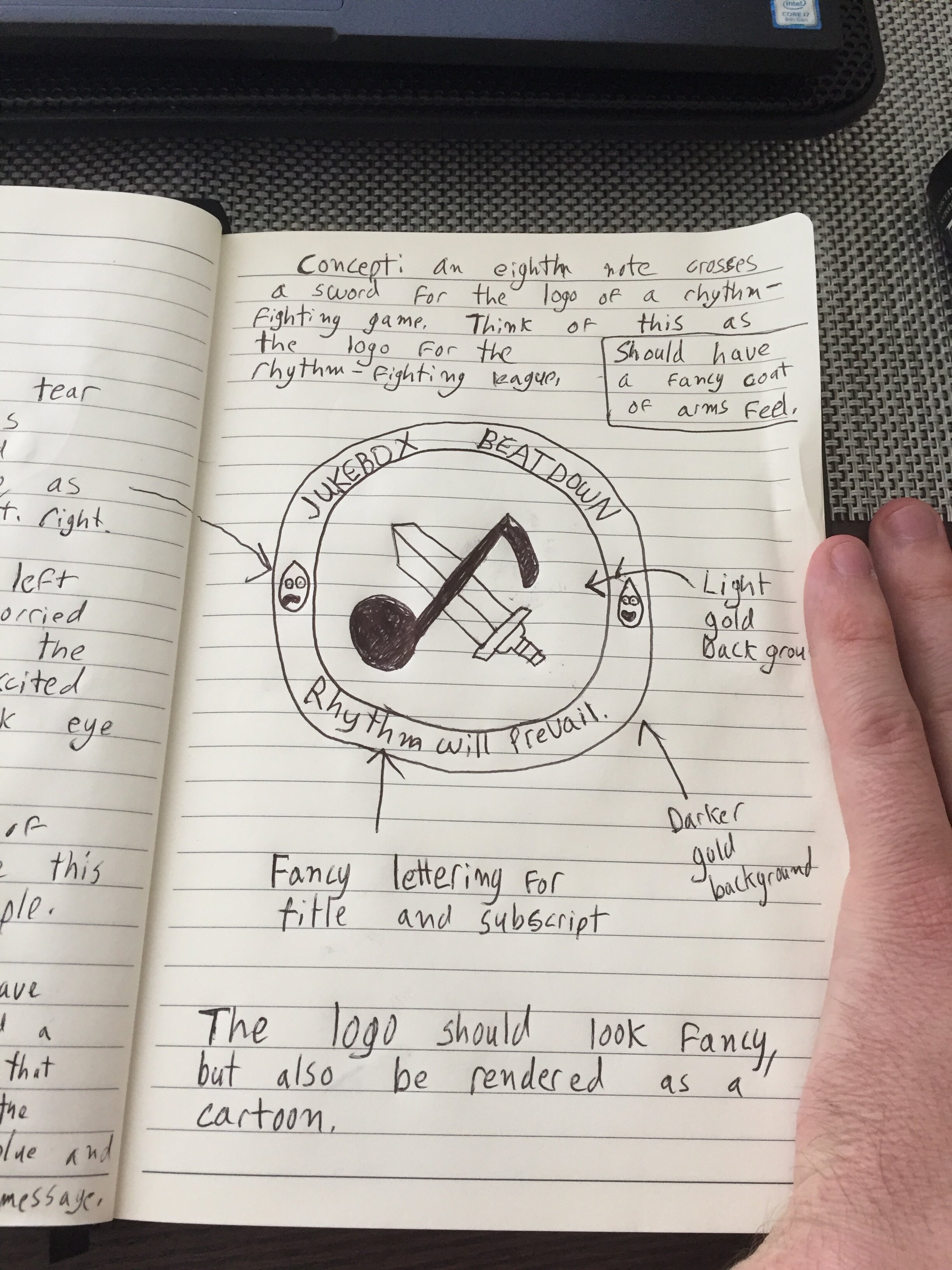
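The “time is money” comparison boils down to a few lines of arithmetic. The sketch below is only an illustration: every number in it is hypothetical, and the `margin` parameter is one possible way of pinning down “significantly cheaper” (here, the quote must be at most half the cost of doing the task yourself).

```python
def should_outsource(hours_if_diy, hourly_rate, vendor_quote, margin=0.5):
    """Outsource when the vendor quote is well below the cost of my own time."""
    diy_cost = hours_if_diy * hourly_rate
    return vendor_quote <= diy_cost * margin


HOURLY_RATE = 20.0  # hypothetical hourly pay, in dollars

# Hypothetical tasks: name -> (hours if done myself, vendor quote in dollars)
tasks = {
    "logo design": (6, 30.0),
    "gameplay scripting": (10, 400.0),
}

for name, (hours, quote) in tasks.items():
    decision = "outsource" if should_outsource(hours, HOURLY_RATE, quote) else "do it myself"
    print(f"{name}: {decision}")
```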

Ultimately, I ended up outsourcing a considerable amount of work, including the voice-acting, poster design, logo design, and character artwork. I spent $200 between several vendors to get all these items. This amount of work took about two weeks to be delivered.

In the gallery below, you can see the final artwork followed by the original sketch I sent to the Fiverr artists:

For all of this outsourcing, I used Fiverr, a freelancing website, which was a great experience. If you decide to use Fiverr for your work, consider using my referral code, which will give you 20% off your first order: http://www.fiverr.com/s2/5b56fb53eb

Full disclosure: I will receive Fiverr credits if you sign up with the above link as part of their referral program. I am not affiliated with them in any other way. My opinions are my own.

Presentation

With my game built, optimized, and marketed, it was time to fly to Menlo Park, CA and present the game:

Next Steps (Several Weeks Later)

Unfortunately, Jukebox Beatdown did not win any of the Launchpad grants. However, I am very happy with the project and will be polishing the vertical slice for release on itch.io so that others can play it.

Amongst other small tweaks, this next sprint will be about:

Adding more attacks to Funky Franny’s moveset.

Adding a system that ranks the player’s performance within the boss battle like this.

Giving the game more “juice,” which means making the game feel more visceral.